GPDB Installation

GPDB

Preparation

templatePath: E:\vm\linux\template79

displayName: mdw

hostname: mdw.sky.local

path: E:\vm\linux\gpdb

description: mdw

ip: 192.168.181.231

numvcpus: 2

coresPerSocket: 2

memsize: 4096

---

templatePath: E:\vm\linux\template79

displayName: smdw

hostname: smdw.sky.local

path: F:\vm\linux\gpdb

description: smdw

ip: 192.168.181.232

numvcpus: 2

coresPerSocket: 2

memsize: 4096

---

templatePath: E:\vm\linux\template79

displayName: sdw1

hostname: sdw1.sky.local

path: E:\vm\linux\gpdb

description: sdw1

ip: 192.168.181.233

numvcpus: 2

coresPerSocket: 2

memsize: 4096

---

templatePath: E:\vm\linux\template79

displayName: sdw2

hostname: sdw2.sky.local

path: F:\vm\linux\gpdb

description: sdw2

ip: 192.168.181.234

numvcpus: 2

coresPerSocket: 2

memsize: 4096

---

templatePath: E:\vm\linux\template79

displayName: sdw3

hostname: sdw3.sky.local

path: E:\vm\linux\gpdb

description: sdw3

ip: 192.168.181.235

numvcpus: 2

coresPerSocket: 2

memsize: 4096

---

templatePath: E:\vm\linux\template79

displayName: sdw4

hostname: sdw4.sky.local

path: F:\vm\linux\gpdb

description: sdw4

ip: 192.168.181.236

numvcpus: 2

coresPerSocket: 2

memsize: 4096

java -jar E:\vm\CopyVMWare-1.1.0.jar `

--force `

--yaml E:\vm\conf\gpdb_hms.yaml

Configuring Your Systems

cat >> /etc/hosts <<EOF

# GPDB HMS

192.168.181.231 mdw.sky.local mdw

192.168.181.232 smdw.sky.local smdw

192.168.181.233 sdw1.sky.local sdw1

192.168.181.234 sdw2.sky.local sdw2

192.168.181.235 sdw3.sky.local sdw3

192.168.181.236 sdw4.sky.local sdw4

EOF
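Because `cat >> /etc/hosts` appends unconditionally, running it a second time duplicates the whole block. A minimal sketch of a guard, assuming the `# GPDB HMS` comment serves as a marker. `append_once` is a hypothetical helper, not part of any tool used in these notes, and the demo writes to a scratch file rather than `/etc/hosts`:

```shell
# append_once MARKER FILE: append stdin to FILE unless MARKER already appears in it.
# Hypothetical helper for illustration only.
append_once() {
  marker=$1
  file=$2
  if grep -qF -- "$marker" "$file" 2>/dev/null; then
    cat > /dev/null          # drain stdin; the block is already present
  else
    cat >> "$file"
  fi
}

# Demo against a scratch file instead of /etc/hosts: the second run is a no-op.
demo_hosts=$(mktemp)
for run in 1 2 ; do
  append_once '# GPDB HMS' "$demo_hosts" << EOF
# GPDB HMS
192.168.181.231 mdw.sky.local mdw
192.168.181.232 smdw.sky.local smdw
EOF
done
grep -c 'GPDB HMS' "$demo_hosts"
```

The same guard would apply to the other `cat >>` steps in these notes (`/etc/bashrc`, `/etc/sysctl.conf`, `/etc/security/limits.conf`, `/etc/sudoers`), each with its own marker comment.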

cat >> /etc/bashrc <<EOF

export JAVA_HOME=/usr/lib/jvm/java

export PATH=\${JAVA_HOME}/bin:\${PATH}

EOF

. /etc/bashrc

# yum install -y sshpass

# ssh-keygen -t rsa -N '' -f ~/.ssh/id_rsa

# export SSHPASS="PASSWORD"

# for i in {1..1} ; do sshpass -e ssh -o StrictHostKeyChecking=no root@192.168.181.23${i} "mkdir -p ~/.ssh ; chmod 700 ~/.ssh ; touch ~/.ssh/authorized_keys ; echo '$(cat ~/.ssh/id_rsa.pub)' >> ~/.ssh/authorized_keys ; chmod 600 ~/.ssh/authorized_keys" ; done

# for i in {2..6} ; do sshpass -e ssh -o StrictHostKeyChecking=no root@192.168.181.23${i} "rm -rf ~/.ssh ; mkdir -p ~/.ssh ; chmod 700 ~/.ssh ; touch ~/.ssh/authorized_keys ; echo '$(cat ~/.ssh/id_rsa.pub)' >> ~/.ssh/authorized_keys ; chmod 600 ~/.ssh/authorized_keys ; echo SUCCESS" ; done

for i in {1..1} ; do echo mdw.sky.local ; done | xargs -P 2 -I {} ssh {} -o StrictHostKeyChecking=no "hostname"

for i in {1..1} ; do echo smdw.sky.local ; done | xargs -P 2 -I {} ssh {} -o StrictHostKeyChecking=no "hostname"

for i in {1..4} ; do echo sdw${i}.sky.local ; done | xargs -P 5 -I {} ssh {} -o StrictHostKeyChecking=no "hostname"

for i in {1..1} ; do echo mdw ; done | xargs -P 2 -I {} ssh {} -o StrictHostKeyChecking=no "hostname"

for i in {1..1} ; do echo smdw ; done | xargs -P 2 -I {} ssh {} -o StrictHostKeyChecking=no "hostname"

for i in {1..4} ; do echo sdw${i} ; done | xargs -P 5 -I {} ssh {} -o StrictHostKeyChecking=no "hostname"

for i in {2..6} ; do echo 192.168.181.23${i} ; done | xargs -P 7 -I {} scp /etc/{bashrc,hosts} {}:/etc
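Most steps in these notes repeat the same `for ... | xargs` fan-out over the host IPs. A minimal sketch of that pattern as a function, assuming the `192.168.181.23x` addressing from the host list. `gp_hosts` is a hypothetical name, and the example only echoes the command it would run:

```shell
# gp_hosts FIRST LAST: print one IP per line for hosts 192.168.181.23FIRST..23LAST.
# Hypothetical helper; the addressing scheme is taken from the /etc/hosts entries.
gp_hosts() {
  for i in $(seq "$1" "$2") ; do
    echo "192.168.181.23${i}"
  done
}

# Dry run: print the scp commands instead of executing them.
gp_hosts 2 6 | xargs -I {} echo scp /etc/bashrc /etc/hosts {}:/etc
```

Dropping the `echo` and adding `-P N` gives the parallel form used throughout these notes.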

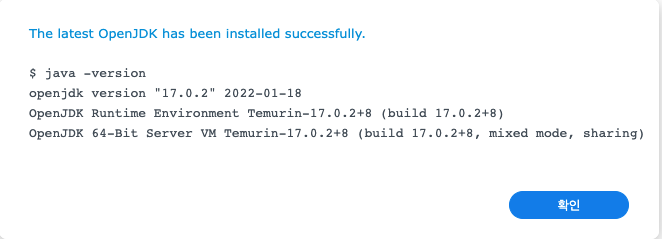

for i in {1..6} ; do echo 192.168.181.23${i} ; done | xargs -P 4 -I {} ssh {} "yum install -y net-tools gcc* git vim wget zip unzip tar curl dstat ntp java-1.8.0-openjdk-devel"

Disable or Configure Firewall Software

for i in {1..6} ; do echo 192.168.181.23${i} ; done | xargs -P 8 -I {} ssh {} "systemctl stop firewalld && systemctl disable firewalld"

Synchronizing System Clocks

for i in {1..6} ; do echo 192.168.181.23${i} ; done | xargs -P 8 -I {} ssh {} "systemctl enable ntpd ; systemctl start ntpd ; ntpq -p"

for i in {1..6} ; do echo 192.168.181.23${i} ; done | xargs -P 8 -I {} ssh {} "ntpq -p"

Setting Greenplum Environment Variables

cat >> ~/.bashrc << EOF

# 20220826 hskimsky for gpdb

source /usr/local/greenplum-db/greenplum_path.sh

export MASTER_DATA_DIRECTORY=/data/master/gpseg-1

export PGPORT=5432

export PGUSER=gpadmin

export PGDATABASE=gpadmin

export LD_PRELOAD=/lib64/libz.so.1 ps

EOF

for i in {2..6} ; do echo 192.168.181.23${i} ; done | xargs -P 7 -I {} scp ~/.bashrc {}:~

for i in {1..6} ; do echo 192.168.181.23${i} ; done | xargs -P 8 -I {} ssh {} "mkdir -p ~/Downloads/gpdb"

cd ~/Downloads/gpdb

wget https://github.com/greenplum-db/gpdb/releases/download/6.21.1/open-source-greenplum-db-6.21.1-rhel7-x86_64.rpm

for i in {2..6} ; do echo 192.168.181.23${i} ; done | xargs -P 7 -I {} scp ~/Downloads/gpdb/open-source-greenplum-db-6.21.1-rhel7-x86_64.rpm {}:~/Downloads/gpdb

Disable or Configure SELinux

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

for i in {2..6} ; do echo 192.168.181.23${i} ; done | xargs -P 7 -I {} scp /etc/selinux/config {}:/etc/selinux
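The `sed` expression only rewrites a line that currently reads `SELINUX=enforcing`; a host already set to `permissive` would be left unchanged. A minimal sketch that covers both values, run against a scratch copy rather than `/etc/selinux/config`:

```shell
# Work on a scratch copy so the sketch is safe to run anywhere.
selinux_demo=$(mktemp)
printf 'SELINUX=permissive\nSELINUXTYPE=targeted\n' > "$selinux_demo"

# Anchor to the start of the line so SELINUXTYPE and comment lines are left alone.
sed -i -E 's/^SELINUX=(enforcing|permissive)$/SELINUX=disabled/' "$selinux_demo"
cat "$selinux_demo"
```

A change to `/etc/selinux/config` takes effect at the next boot; `sestatus` reports the mode currently in force.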

Recommended OS Parameters Settings

The sysctl.conf File

cat >> /etc/sysctl.conf << EOF

# 20220826 for gpdb

# kernel.shmall = _PHYS_PAGES / 2 # See Shared Memory Pages

kernel.shmall = $(echo $(expr $(getconf _PHYS_PAGES) / 2))

# kernel.shmmax = kernel.shmall * PAGE_SIZE

kernel.shmmax = $(echo $(expr $(getconf _PHYS_PAGES) / 2 \* $(getconf PAGE_SIZE)))

kernel.shmmni = 4096

# See Segment Host Memory

vm.overcommit_memory = 2

vm.overcommit_ratio = 95

# See Port Settings

net.ipv4.ip_local_port_range = 10000 65535

kernel.sem = 250 2048000 200 8192

kernel.sysrq = 1

kernel.core_uses_pid = 1

kernel.msgmnb = 65536

kernel.msgmax = 65536

kernel.msgmni = 2048

net.ipv4.tcp_syncookies = 1

net.ipv4.conf.default.accept_source_route = 0

net.ipv4.tcp_max_syn_backlog = 4096

net.ipv4.conf.all.arp_filter = 1

net.core.netdev_max_backlog = 10000

net.core.rmem_max = 2097152

net.core.wmem_max = 2097152

vm.swappiness = 10

vm.zone_reclaim_mode = 0

vm.dirty_expire_centisecs = 500

vm.dirty_writeback_centisecs = 100

# 64 GB of memory or more

# vm.dirty_background_ratio = 0

# vm.dirty_ratio = 0

# vm.dirty_background_bytes = 1610612736

# vm.dirty_bytes = 4294967296

# less than 64 GB of memory

vm.dirty_background_ratio = 3

vm.dirty_ratio = 10

$(awk 'BEGIN {OFMT = "%.0f";} /MemTotal/ {print "vm.min_free_kbytes =", $2 * .03;}' /proc/meminfo)

EOF

for i in {2..6} ; do echo 192.168.181.23${i} ; done | xargs -P 7 -I {} scp /etc/sysctl.conf {}:/etc
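The computed entries in the heredoc are expanded on the host where the `cat` runs, so the copied file carries that host's values. A minimal sketch of the same arithmetic, printed to the terminal instead of written to `/etc/sysctl.conf`, using shell arithmetic in place of `expr`:

```shell
# Same arithmetic as the sysctl.conf heredoc, without touching /etc.
phys_pages=$(getconf _PHYS_PAGES)
page_size=$(getconf PAGE_SIZE)

shmall=$(( phys_pages / 2 ))          # half of physical memory, in pages
shmmax=$(( shmall * page_size ))      # the same amount, in bytes

echo "kernel.shmall = ${shmall}"
echo "kernel.shmmax = ${shmmax}"

# 3% of MemTotal (in kB), rounded by awk's %.0f output format.
if [ -r /proc/meminfo ] ; then
  awk 'BEGIN {OFMT = "%.0f";} /MemTotal/ {print "vm.min_free_kbytes =", $2 * .03;}' /proc/meminfo
fi
```

Copying one file to every host works here because all six VMs are defined with `memsize: 4096`; hosts with different memory sizes would need the values computed per host. `sysctl -p` reloads `/etc/sysctl.conf` without a reboot.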

System Resources Limits

cat >> /etc/security/limits.conf << EOF

# 20220827 hskimsky for gpdb

* soft nofile 524288

* hard nofile 524288

* soft nproc 131072

* hard nproc 131072

EOF

for i in {2..6} ; do echo 192.168.181.23${i} ; done | xargs -P 7 -I {} scp /etc/security/limits.conf {}:/etc/security

vim /etc/default/grub

...

GRUB_CMDLINE_LINUX="... transparent_hugepage=never"

...

for i in {2..6} ; do echo 192.168.181.23${i} ; done | xargs -P 7 -I {} scp /etc/default/grub {}:/etc/default
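Copying `/etc/default/grub` does not by itself change the boot configuration. On RHEL/CentOS 7 the GRUB configuration is typically regenerated with `grub2-mkconfig -o /boot/grub2/grub.cfg` (the BIOS path, which is an assumption about these VMs) and the host rebooted. After the reboot, `/sys/kernel/mm/transparent_hugepage/enabled` shows the active mode in brackets. A minimal sketch of reading that value; `thp_mode` is a hypothetical helper and the demo parses a sample line:

```shell
# thp_mode: read a line such as "always madvise [never]" on stdin and
# print the bracketed (active) value. Hypothetical helper for illustration.
thp_mode() {
  sed -n 's/.*\[\(.*\)\].*/\1/p'
}

# Parse a sample line; on a real host, feed it
# /sys/kernel/mm/transparent_hugepage/enabled instead.
echo 'always madvise [never]' | thp_mode
```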

Creating the Greenplum Administrative User

for i in {1..6} ; do echo 192.168.181.23${i} ; done | xargs -P 6 -I {} ssh {} "groupadd gpadmin"

for i in {1..6} ; do echo 192.168.181.23${i} ; done | xargs -P 6 -I {} ssh {} "useradd gpadmin -r -m -g gpadmin"

for i in {1..6} ; do echo 192.168.181.23${i} ; done | xargs -P 6 -I {} ssh {} "echo 'changeme' | passwd gpadmin --stdin"

cat >> /etc/sudoers << EOF

# 20220827 for gpdb

gpadmin ALL=(ALL) NOPASSWD: ALL

EOF

for i in {2..6} ; do echo 192.168.181.23${i} ; done | xargs -P 7 -I {} scp /etc/sudoers {}:/etc

Installing the Greenplum Database Software

for i in {1..6} ; do echo 192.168.181.23${i} ; done | xargs -P 6 -I {} ssh {} "yum install -y ~/Downloads/gpdb/open-source-greenplum-db-6.21.1-rhel7-x86_64.rpm"

for i in {1..6} ; do echo 192.168.181.23${i} ; done | xargs -P 6 -I {} ssh {} "chown -R gpadmin:gpadmin /usr/local/greenplum*"

for i in {1..6} ; do echo 192.168.181.23${i} ; done | xargs -P 6 -I {} ssh {} "chgrp -R gpadmin /usr/local/greenplum*"

Enabling Passwordless SSH

su - gpadmin

ssh-keygen -t rsa -N '' -f ~/.ssh/id_rsa

export SSHPASS="changeme"

for i in {1..1} ; do sshpass -e ssh -o StrictHostKeyChecking=no 192.168.181.23${i} "mkdir -p ~/.ssh ; chmod 700 ~/.ssh ; touch ~/.ssh/authorized_keys ; echo '$(cat ~/.ssh/id_rsa.pub)' >> ~/.ssh/authorized_keys ; chmod 600 ~/.ssh/authorized_keys" ; done

for i in {2..6} ; do sshpass -e ssh -o StrictHostKeyChecking=no 192.168.181.23${i} "rm -rf ~/.ssh ; mkdir -p ~/.ssh ; chmod 700 ~/.ssh ; touch ~/.ssh/authorized_keys ; echo '$(cat ~/.ssh/id_rsa.pub)' >> ~/.ssh/authorized_keys ; chmod 600 ~/.ssh/authorized_keys ; echo SUCCESS" ; done

for i in {1..1} ; do echo mdw.sky.local ; done | xargs -P 2 -I {} ssh {} -o StrictHostKeyChecking=no "hostname"

for i in {1..1} ; do echo smdw.sky.local ; done | xargs -P 2 -I {} ssh {} -o StrictHostKeyChecking=no "hostname"

for i in {1..4} ; do echo sdw${i}.sky.local ; done | xargs -P 5 -I {} ssh {} -o StrictHostKeyChecking=no "hostname"

for i in {1..1} ; do echo mdw ; done | xargs -P 2 -I {} ssh {} -o StrictHostKeyChecking=no "hostname"

for i in {1..1} ; do echo smdw ; done | xargs -P 2 -I {} ssh {} -o StrictHostKeyChecking=no "hostname"

for i in {1..4} ; do echo sdw${i} ; done | xargs -P 5 -I {} ssh {} -o StrictHostKeyChecking=no "hostname"

Setting Greenplum Environment Variables

cat >> ~/.bashrc << EOF

# 20220826 hskimsky for gpdb

source /usr/local/greenplum-db/greenplum_path.sh

export MASTER_DATA_DIRECTORY=/data/master/gpseg-1

export PGPORT=5432

export PGUSER=gpadmin

export PGDATABASE=gpadmin

export LD_PRELOAD=/lib64/libz.so.1 ps

EOF

for i in {2..6} ; do echo 192.168.181.23${i} ; done | xargs -P 7 -I {} scp ~/.bashrc {}:~

Confirming Your Installation
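The `gpssh` command in this section reads `hostfile_exkeys`, which none of the earlier steps create. A minimal sketch, assuming the file lists all six hosts by short name, one per line (consistent with the hosts that answer in the output), and is created in the directory where `gpssh` is run:

```shell
# Assumption: hostfile_exkeys lists every host in the cluster, one per line.
cat > hostfile_exkeys << EOF
mdw
smdw
sdw1
sdw2
sdw3
sdw4
EOF
```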

gpssh -f hostfile_exkeys -e 'ls -alF /usr/local/greenplum-db/greenplum_path.sh'

[gpadmin@mdw:~]$ gpssh -f hostfile_exkeys -e 'ls -alF /usr/local/greenplum-db/greenplum_path.sh'

[sdw1] ls -alF /usr/local/greenplum-db/greenplum_path.sh

[sdw1] -rw-r--r--. 1 gpadmin gpadmin 650 Aug 6 04:51 /usr/local/greenplum-db/greenplum_path.sh

[sdw3] ls -alF /usr/local/greenplum-db/greenplum_path.sh

[sdw3] -rw-r--r--. 1 gpadmin gpadmin 650 Aug 6 04:51 /usr/local/greenplum-db/greenplum_path.sh

[ mdw] ls -alF /usr/local/greenplum-db/greenplum_path.sh

[ mdw] -rw-r--r--. 1 gpadmin gpadmin 650 Aug 6 04:51 /usr/local/greenplum-db/greenplum_path.sh

[sdw2] ls -alF /usr/local/greenplum-db/greenplum_path.sh

[sdw2] -rw-r--r--. 1 gpadmin gpadmin 650 Aug 6 04:51 /usr/local/greenplum-db/greenplum_path.sh

[smdw] ls -alF /usr/local/greenplum-db/greenplum_path.sh

[smdw] -rw-r--r--. 1 gpadmin gpadmin 650 Aug 6 04:51 /usr/local/greenplum-db/greenplum_path.sh

[sdw4] ls -alF /usr/local/greenplum-db/greenplum_path.sh

[sdw4] -rw-r--r--. 1 gpadmin gpadmin 650 Aug 6 04:51 /usr/local/greenplum-db/greenplum_path.sh

[gpadmin@mdw:~]$

Creating the Data Storage Areas

Creating Data Storage Areas on the Master and Standby Master Hosts

To create the data directory location on the master

mkdir -p /data/master

chown gpadmin:gpadmin /data/master

source /usr/local/greenplum-db/greenplum_path.sh

gpssh -h smdw -e 'mkdir -p /data/master'

gpssh -h smdw -e 'chown gpadmin:gpadmin /data/master'

[root@mdw:~]# mkdir -p /data/master

[root@mdw:~]# chown gpadmin:gpadmin /data/master

[root@mdw:~]# source /usr/local/greenplum-db/greenplum_path.sh

[root@mdw:~]# gpssh -h smdw -e 'mkdir -p /data/master'

[smdw] mkdir -p /data/master

[root@mdw:~]# gpssh -h smdw -e 'chown gpadmin:gpadmin /data/master'

[smdw] chown gpadmin:gpadmin /data/master

[root@mdw:~]#

Creating Data Storage Areas on Segment Hosts

To create the data directory locations on all segment hosts

cat >> hostfile_gpssh_segonly << EOF

sdw1

sdw2

sdw3

sdw4

EOF

source /usr/local/greenplum-db/greenplum_path.sh

gpssh -f hostfile_gpssh_segonly -e 'mkdir -p /data/primary'

gpssh -f hostfile_gpssh_segonly -e 'mkdir -p /data/mirror'

gpssh -f hostfile_gpssh_segonly -e 'chown -R gpadmin /data/*'

[root@mdw:~]# cat >> hostfile_gpssh_segonly << EOF

> sdw1

> sdw2

> sdw3

> sdw4

> EOF

[root@mdw:~]# source /usr/local/greenplum-db/greenplum_path.sh

[root@mdw:~]# gpssh -f hostfile_gpssh_segonly -e 'mkdir -p /data/primary'

[sdw2] mkdir -p /data/primary

[sdw1] mkdir -p /data/primary

[sdw3] mkdir -p /data/primary

[sdw4] mkdir -p /data/primary

[root@mdw:~]# gpssh -f hostfile_gpssh_segonly -e 'mkdir -p /data/mirror'

[sdw1] mkdir -p /data/mirror

[sdw4] mkdir -p /data/mirror

[sdw2] mkdir -p /data/mirror

[sdw3] mkdir -p /data/mirror

[root@mdw:~]# gpssh -f hostfile_gpssh_segonly -e 'chown -R gpadmin /data/*'

[sdw4] chown -R gpadmin /data/*

[sdw2] chown -R gpadmin /data/*

[sdw1] chown -R gpadmin /data/*

[sdw3] chown -R gpadmin /data/*

[root@mdw:~]#

Initializing a Greenplum Database System

Initializing Greenplum Database

Creating the Initialization Host File

- The following example assumes that each segment node has three unbonded NICs.

- NIC bonding is recommended for building a load-balanced or fault-tolerant network.

ssh gpadmin@mdw

cd ~

mkdir ~/gpconfigs

cd ~/gpconfigs

cat > hostfile_gpinitsystem << EOF

sdw1

sdw2

sdw3

sdw4

EOF

Creating the Greenplum Database Configuration File

# cp $GPHOME/docs/cli_help/gpconfigs/gpinitsystem_config ~/gpconfigs/gpinitsystem_config

cat > ~/gpconfigs/gpinitsystem_config << EOF

ARRAY_NAME="Greenplum Data Platform"

SEG_PREFIX=gpseg

PORT_BASE=6000

declare -a DATA_DIRECTORY=(/data/primary /data/primary)

MASTER_HOSTNAME=mdw.sky.local

MASTER_DIRECTORY=/data/master

MASTER_PORT=5432

TRUSTED_SHELL=ssh

CHECK_POINT_SEGMENTS=8

ENCODING=UNICODE

MIRROR_PORT_BASE=7000

declare -a MIRROR_DATA_DIRECTORY=(/data/mirror /data/mirror)

#DATABASE_NAME=name_of_database

#MACHINE_LIST_FILE=/home/gpadmin/gpconfigs/hostfile_gpinitsystem

EOF
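The number of entries in `DATA_DIRECTORY` sets how many primary segments are created on each segment host, so two entries across four hosts give eight primaries. A minimal sketch of that arithmetic, with the values copied from the configuration file and the host file; `segment_hosts` and the other lowercase names are illustrative only:

```shell
# Values copied from gpinitsystem_config and hostfile_gpinitsystem.
declare -a DATA_DIRECTORY=(/data/primary /data/primary)
segment_hosts=(sdw1 sdw2 sdw3 sdw4)

primaries_per_host=${#DATA_DIRECTORY[@]}
total_primaries=$(( primaries_per_host * ${#segment_hosts[@]} ))

echo "primaries per host: ${primaries_per_host}"
echo "total primaries:    ${total_primaries}"

# Primary ports on each host count up from PORT_BASE.
PORT_BASE=6000
for (( n = 0; n < primaries_per_host; n++ )) ; do
  echo "primary port: $(( PORT_BASE + n ))"
done
```

`MIRROR_DATA_DIRECTORY` and `MIRROR_PORT_BASE` follow the same pattern for the mirrors.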

Running the Initialization Utility

To run the initialization utility

cd ~

# gpinitsystem -c gpconfigs/gpinitsystem_config -h gpconfigs/hostfile_gpinitsystem

gpinitsystem -c gpconfigs/gpinitsystem_config -h gpconfigs/hostfile_gpinitsystem -s smdw --mirror-mode=spread

[gpadmin@mdw:~/gpconfigs]$ cd ~

[gpadmin@mdw:~]$ gpinitsystem -c gpconfigs/gpinitsystem_config -h gpconfigs/hostfile_gpinitsystem -s smdw --mirror-mode=spread

20220828:18:44:11:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Checking configuration parameters, please wait...

20220828:18:44:11:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Reading Greenplum configuration file gpconfigs/gpinitsystem_config

20220828:18:44:11:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Locale has not been set in gpconfigs/gpinitsystem_config, will set to default value

20220828:18:44:11:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Locale set to en_US.utf8

20220828:18:44:11:020997 gpinitsystem:mdw:gpadmin-[INFO]:-No DATABASE_NAME set, will exit following template1 updates

20220828:18:44:11:020997 gpinitsystem:mdw:gpadmin-[INFO]:-MASTER_MAX_CONNECT not set, will set to default value 250

20220828:18:44:12:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Checking configuration parameters, Completed

20220828:18:44:12:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Commencing multi-home checks, please wait...

....

20220828:18:44:13:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Configuring build for standard array

20220828:18:44:13:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Sufficient hosts for spread mirroring request

20220828:18:44:13:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Commencing multi-home checks, Completed

20220828:18:44:13:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Building primary segment instance array, please wait...

........

20220828:18:44:18:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Building spread mirror array type , please wait...

........

20220828:18:44:22:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Checking Master host

20220828:18:44:23:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Checking new segment hosts, please wait...

................

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Checking new segment hosts, Completed

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Greenplum Database Creation Parameters

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:---------------------------------------

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Master Configuration

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:---------------------------------------

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Master instance name = Greenplum Data Platform

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Master hostname = mdw.sky.local

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Master port = 5432

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Master instance dir = /data/master/gpseg-1

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Master LOCALE = en_US.utf8

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Greenplum segment prefix = gpseg

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Master Database =

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Master connections = 250

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Master buffers = 128000kB

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Segment connections = 750

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Segment buffers = 128000kB

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Checkpoint segments = 12

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Encoding = UNICODE

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Postgres param file = Off

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Initdb to be used = /usr/local/greenplum-db-6.21.1/bin/initdb

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-GP_LIBRARY_PATH is = /usr/local/greenplum-db-6.21.1/lib

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-HEAP_CHECKSUM is = on

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-HBA_HOSTNAMES is = 0

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Ulimit check = Passed

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Array host connect type = Single hostname per node

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Master IP address [1] = ::1

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Master IP address [2] = 192.168.181.231

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Master IP address [3] = fe80::3f77:4886:8cc0:25ba

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Standby Master = smdw

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Number of primary segments = 2

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Standby IP address = ::1

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Standby IP address = 192.168.181.232

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Standby IP address = fe80::1958:6310:7a95:7422

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Standby IP address = fe80::3f77:4886:8cc0:25ba

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Standby IP address = fe80::7934:f85b:a866:6599

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Total Database segments = 8

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Trusted shell = ssh

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Number segment hosts = 4

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Mirror port base = 7000

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Number of mirror segments = 2

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Mirroring config = ON

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Mirroring type = Spread

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:----------------------------------------

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Greenplum Primary Segment Configuration

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:----------------------------------------

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-sdw1.sky.local 6000 sdw1 /data/primary/gpseg0 2

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-sdw1.sky.local 6001 sdw1 /data/primary/gpseg1 3

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-sdw2.sky.local 6000 sdw2 /data/primary/gpseg2 4

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-sdw2.sky.local 6001 sdw2 /data/primary/gpseg3 5

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-sdw3.sky.local 6000 sdw3 /data/primary/gpseg4 6

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-sdw3.sky.local 6001 sdw3 /data/primary/gpseg5 7

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-sdw4.sky.local 6000 sdw4 /data/primary/gpseg6 8

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-sdw4.sky.local 6001 sdw4 /data/primary/gpseg7 9

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:---------------------------------------

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Greenplum Mirror Segment Configuration

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:---------------------------------------

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-sdw2.sky.local 7000 sdw2 /data/mirror/gpseg0 10

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-sdw3.sky.local 7001 sdw3 /data/mirror/gpseg1 11

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-sdw3.sky.local 7000 sdw3 /data/mirror/gpseg2 12

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-sdw4.sky.local 7001 sdw4 /data/mirror/gpseg3 13

20220828:18:44:43:020997 gpinitsystem:mdw:gpadmin-[INFO]:-sdw4.sky.local 7000 sdw4 /data/mirror/gpseg4 14

20220828:18:44:44:020997 gpinitsystem:mdw:gpadmin-[INFO]:-sdw1.sky.local 7001 sdw1 /data/mirror/gpseg5 15

20220828:18:44:44:020997 gpinitsystem:mdw:gpadmin-[INFO]:-sdw1.sky.local 7000 sdw1 /data/mirror/gpseg6 16

20220828:18:44:44:020997 gpinitsystem:mdw:gpadmin-[INFO]:-sdw2.sky.local 7001 sdw2 /data/mirror/gpseg7 17

Continue with Greenplum creation Yy|Nn (default=N):

> Y

20220828:18:44:51:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Building the Master instance database, please wait...

20220828:18:44:56:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Starting the Master in admin mode

20220828:18:44:57:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Commencing parallel build of primary segment instances

20220828:18:44:57:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Spawning parallel processes batch [1], please wait...

........

20220828:18:44:57:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Waiting for parallel processes batch [1], please wait...

......................

20220828:18:45:19:020997 gpinitsystem:mdw:gpadmin-[INFO]:------------------------------------------------

20220828:18:45:19:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Parallel process exit status

20220828:18:45:19:020997 gpinitsystem:mdw:gpadmin-[INFO]:------------------------------------------------

20220828:18:45:19:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Total processes marked as completed = 8

20220828:18:45:19:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Total processes marked as killed = 0

20220828:18:45:19:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Total processes marked as failed = 0

20220828:18:45:19:020997 gpinitsystem:mdw:gpadmin-[INFO]:------------------------------------------------

20220828:18:45:19:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Removing back out file

20220828:18:45:19:020997 gpinitsystem:mdw:gpadmin-[INFO]:-No errors generated from parallel processes

20220828:18:45:19:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Restarting the Greenplum instance in production mode

20220828:18:45:19:028443 gpstop:mdw:gpadmin-[INFO]:-Starting gpstop with args: -a -l /home/gpadmin/gpAdminLogs -m -d /data/master/gpseg-1

20220828:18:45:19:028443 gpstop:mdw:gpadmin-[INFO]:-Gathering information and validating the environment...

20220828:18:45:19:028443 gpstop:mdw:gpadmin-[INFO]:-Obtaining Greenplum Master catalog information

20220828:18:45:19:028443 gpstop:mdw:gpadmin-[INFO]:-Obtaining Segment details from master...

20220828:18:45:19:028443 gpstop:mdw:gpadmin-[INFO]:-Greenplum Version: 'postgres (Greenplum Database) 6.21.1 build commit:fff63ec5cc64f2adc033fc1203afbc5fbb9ad7d9 Open Source'

20220828:18:45:19:028443 gpstop:mdw:gpadmin-[INFO]:-Commencing Master instance shutdown with mode='smart'

20220828:18:45:19:028443 gpstop:mdw:gpadmin-[INFO]:-Master segment instance directory=/data/master/gpseg-1

20220828:18:45:19:028443 gpstop:mdw:gpadmin-[INFO]:-Stopping master segment and waiting for user connections to finish ...

server shutting down

20220828:18:45:20:028443 gpstop:mdw:gpadmin-[INFO]:-Attempting forceful termination of any leftover master process

20220828:18:45:20:028443 gpstop:mdw:gpadmin-[INFO]:-Terminating processes for segment /data/master/gpseg-1

20220828:18:45:20:028466 gpstart:mdw:gpadmin-[INFO]:-Starting gpstart with args: -a -l /home/gpadmin/gpAdminLogs -d /data/master/gpseg-1

20220828:18:45:20:028466 gpstart:mdw:gpadmin-[INFO]:-Gathering information and validating the environment...

20220828:18:45:20:028466 gpstart:mdw:gpadmin-[INFO]:-Greenplum Binary Version: 'postgres (Greenplum Database) 6.21.1 build commit:fff63ec5cc64f2adc033fc1203afbc5fbb9ad7d9 Open Source'

20220828:18:45:20:028466 gpstart:mdw:gpadmin-[INFO]:-Greenplum Catalog Version: '301908232'

20220828:18:45:20:028466 gpstart:mdw:gpadmin-[INFO]:-Starting Master instance in admin mode

20220828:18:45:20:028466 gpstart:mdw:gpadmin-[INFO]:-Obtaining Greenplum Master catalog information

20220828:18:45:20:028466 gpstart:mdw:gpadmin-[INFO]:-Obtaining Segment details from master...

20220828:18:45:20:028466 gpstart:mdw:gpadmin-[INFO]:-Setting new master era

20220828:18:45:20:028466 gpstart:mdw:gpadmin-[INFO]:-Master Started...

20220828:18:45:21:028466 gpstart:mdw:gpadmin-[INFO]:-Shutting down master

20220828:18:45:21:028466 gpstart:mdw:gpadmin-[INFO]:-Commencing parallel segment instance startup, please wait...

.

20220828:18:45:23:028466 gpstart:mdw:gpadmin-[INFO]:-Process results...

20220828:18:45:23:028466 gpstart:mdw:gpadmin-[INFO]:-----------------------------------------------------

20220828:18:45:23:028466 gpstart:mdw:gpadmin-[INFO]:- Successful segment starts = 8

20220828:18:45:23:028466 gpstart:mdw:gpadmin-[INFO]:- Failed segment starts = 0

20220828:18:45:23:028466 gpstart:mdw:gpadmin-[INFO]:- Skipped segment starts (segments are marked down in configuration) = 0

20220828:18:45:23:028466 gpstart:mdw:gpadmin-[INFO]:-----------------------------------------------------

20220828:18:45:23:028466 gpstart:mdw:gpadmin-[INFO]:-Successfully started 8 of 8 segment instances

20220828:18:45:23:028466 gpstart:mdw:gpadmin-[INFO]:-----------------------------------------------------

20220828:18:45:23:028466 gpstart:mdw:gpadmin-[INFO]:-Starting Master instance mdw.sky.local directory /data/master/gpseg-1

20220828:18:45:23:028466 gpstart:mdw:gpadmin-[INFO]:-Command pg_ctl reports Master mdw.sky.local instance active

20220828:18:45:23:028466 gpstart:mdw:gpadmin-[INFO]:-Connecting to dbname='template1' connect_timeout=15

20220828:18:45:23:028466 gpstart:mdw:gpadmin-[INFO]:-No standby master configured. skipping...

20220828:18:45:23:028466 gpstart:mdw:gpadmin-[INFO]:-Database successfully started

20220828:18:45:23:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Completed restart of Greenplum instance in production mode

20220828:18:45:23:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Commencing parallel build of mirror segment instances

20220828:18:45:23:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Spawning parallel processes batch [1], please wait...

........

20220828:18:45:23:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Waiting for parallel processes batch [1], please wait...

...........

20220828:18:45:34:020997 gpinitsystem:mdw:gpadmin-[INFO]:------------------------------------------------

20220828:18:45:34:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Parallel process exit status

20220828:18:45:34:020997 gpinitsystem:mdw:gpadmin-[INFO]:------------------------------------------------

20220828:18:45:34:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Total processes marked as completed = 8

20220828:18:45:34:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Total processes marked as killed = 0

20220828:18:45:34:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Total processes marked as failed = 0

20220828:18:45:34:020997 gpinitsystem:mdw:gpadmin-[INFO]:------------------------------------------------

20220828:18:45:35:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Starting initialization of standby master smdw

20220828:18:45:35:030546 gpinitstandby:mdw:gpadmin-[INFO]:-Validating environment and parameters for standby initialization...

20220828:18:45:35:030546 gpinitstandby:mdw:gpadmin-[INFO]:-Checking for data directory /data/master/gpseg-1 on smdw

20220828:18:45:35:030546 gpinitstandby:mdw:gpadmin-[INFO]:------------------------------------------------------

20220828:18:45:35:030546 gpinitstandby:mdw:gpadmin-[INFO]:-Greenplum standby master initialization parameters

20220828:18:45:35:030546 gpinitstandby:mdw:gpadmin-[INFO]:------------------------------------------------------

20220828:18:45:35:030546 gpinitstandby:mdw:gpadmin-[INFO]:-Greenplum master hostname = mdw.sky.local

20220828:18:45:35:030546 gpinitstandby:mdw:gpadmin-[INFO]:-Greenplum master data directory = /data/master/gpseg-1

20220828:18:45:35:030546 gpinitstandby:mdw:gpadmin-[INFO]:-Greenplum master port = 5432

20220828:18:45:35:030546 gpinitstandby:mdw:gpadmin-[INFO]:-Greenplum standby master hostname = smdw

20220828:18:45:35:030546 gpinitstandby:mdw:gpadmin-[INFO]:-Greenplum standby master port = 5432

20220828:18:45:35:030546 gpinitstandby:mdw:gpadmin-[INFO]:-Greenplum standby master data directory = /data/master/gpseg-1

20220828:18:45:35:030546 gpinitstandby:mdw:gpadmin-[INFO]:-Greenplum update system catalog = On

20220828:18:45:35:030546 gpinitstandby:mdw:gpadmin-[INFO]:-Syncing Greenplum Database extensions to standby

20220828:18:45:36:030546 gpinitstandby:mdw:gpadmin-[INFO]:-The packages on smdw are consistent.

20220828:18:45:36:030546 gpinitstandby:mdw:gpadmin-[INFO]:-Adding standby master to catalog...

20220828:18:45:36:030546 gpinitstandby:mdw:gpadmin-[INFO]:-Database catalog updated successfully.

20220828:18:45:36:030546 gpinitstandby:mdw:gpadmin-[INFO]:-Updating pg_hba.conf file...

20220828:18:45:38:030546 gpinitstandby:mdw:gpadmin-[INFO]:-pg_hba.conf files updated successfully.

20220828:18:45:39:030546 gpinitstandby:mdw:gpadmin-[INFO]:-Starting standby master

20220828:18:45:39:030546 gpinitstandby:mdw:gpadmin-[INFO]:-Checking if standby master is running on host: smdw in directory: /data/master/gpseg-1

20220828:18:45:43:030546 gpinitstandby:mdw:gpadmin-[INFO]:-Cleaning up pg_hba.conf backup files...

20220828:18:45:44:030546 gpinitstandby:mdw:gpadmin-[INFO]:-Backup files of pg_hba.conf cleaned up successfully.

20220828:18:45:44:030546 gpinitstandby:mdw:gpadmin-[INFO]:-Successfully created standby master on smdw

20220828:18:45:44:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Successfully completed standby master initialization

20220828:18:45:44:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Scanning utility log file for any warning messages

20220828:18:45:44:020997 gpinitsystem:mdw:gpadmin-[WARN]:-*******************************************************

20220828:18:45:44:020997 gpinitsystem:mdw:gpadmin-[WARN]:-Scan of log file indicates that some warnings or errors

20220828:18:45:44:020997 gpinitsystem:mdw:gpadmin-[WARN]:-were generated during the array creation

20220828:18:45:44:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Please review contents of log file

20220828:18:45:44:020997 gpinitsystem:mdw:gpadmin-[INFO]:-/home/gpadmin/gpAdminLogs/gpinitsystem_20220828.log

20220828:18:45:44:020997 gpinitsystem:mdw:gpadmin-[INFO]:-To determine level of criticality

20220828:18:45:44:020997 gpinitsystem:mdw:gpadmin-[INFO]:-These messages could be from a previous run of the utility

20220828:18:45:44:020997 gpinitsystem:mdw:gpadmin-[INFO]:-that was called today!

20220828:18:45:44:020997 gpinitsystem:mdw:gpadmin-[WARN]:-*******************************************************

20220828:18:45:44:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Greenplum Database instance successfully created

20220828:18:45:44:020997 gpinitsystem:mdw:gpadmin-[INFO]:-------------------------------------------------------

20220828:18:45:44:020997 gpinitsystem:mdw:gpadmin-[INFO]:-To complete the environment configuration, please

20220828:18:45:44:020997 gpinitsystem:mdw:gpadmin-[INFO]:-update gpadmin .bashrc file with the following

20220828:18:45:44:020997 gpinitsystem:mdw:gpadmin-[INFO]:-1. Ensure that the greenplum_path.sh file is sourced

20220828:18:45:44:020997 gpinitsystem:mdw:gpadmin-[INFO]:-2. Add "export MASTER_DATA_DIRECTORY=/data/master/gpseg-1"

20220828:18:45:44:020997 gpinitsystem:mdw:gpadmin-[INFO]:- to access the Greenplum scripts for this instance:

20220828:18:45:44:020997 gpinitsystem:mdw:gpadmin-[INFO]:- or, use -d /data/master/gpseg-1 option for the Greenplum scripts

20220828:18:45:44:020997 gpinitsystem:mdw:gpadmin-[INFO]:- Example gpstate -d /data/master/gpseg-1

20220828:18:45:44:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Script log file = /home/gpadmin/gpAdminLogs/gpinitsystem_20220828.log

20220828:18:45:44:020997 gpinitsystem:mdw:gpadmin-[INFO]:-To remove instance, run gpdeletesystem utility

20220828:18:45:44:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Standby Master smdw has been configured

20220828:18:45:44:020997 gpinitsystem:mdw:gpadmin-[INFO]:-To activate the Standby Master Segment in the event of Master

20220828:18:45:44:020997 gpinitsystem:mdw:gpadmin-[INFO]:-failure review options for gpactivatestandby

20220828:18:45:44:020997 gpinitsystem:mdw:gpadmin-[INFO]:-------------------------------------------------------

20220828:18:45:44:020997 gpinitsystem:mdw:gpadmin-[INFO]:-The Master /data/master/gpseg-1/pg_hba.conf post gpinitsystem

20220828:18:45:44:020997 gpinitsystem:mdw:gpadmin-[INFO]:-has been configured to allow all hosts within this new

20220828:18:45:44:020997 gpinitsystem:mdw:gpadmin-[INFO]:-array to intercommunicate. Any hosts external to this

20220828:18:45:44:020997 gpinitsystem:mdw:gpadmin-[INFO]:-new array must be explicitly added to this file

20220828:18:45:44:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Refer to the Greenplum Admin support guide which is

20220828:18:45:44:020997 gpinitsystem:mdw:gpadmin-[INFO]:-located in the /usr/local/greenplum-db-6.21.1/docs directory

20220828:18:45:44:020997 gpinitsystem:mdw:gpadmin-[INFO]:-------------------------------------------------------

[gpadmin@mdw:~]$
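The run ends with a `[WARN]` banner asking for the log file to be reviewed. A minimal sketch of pulling the non-INFO lines out of such a log, assuming other severities use the same bracketed tag format as `[INFO]` and `[WARN]`; `scan_gp_log` is a hypothetical helper and the demo runs on a scratch file:

```shell
# scan_gp_log FILE: print WARN, ERROR and FATAL lines from a Greenplum utility log.
# Hypothetical helper; the tag format is taken from the gpinitsystem output.
scan_gp_log() {
  grep -E '\[(WARN|ERROR|FATAL)\]' "$1"
}

# Demo on a scratch file with two lines in the same format as the output.
sample_log=$(mktemp)
cat > "$sample_log" << 'EOF'
20220828:18:45:44:020997 gpinitsystem:mdw:gpadmin-[INFO]:-Please review contents of log file
20220828:18:45:44:020997 gpinitsystem:mdw:gpadmin-[WARN]:-were generated during the array creation
EOF
scan_gp_log "$sample_log"
```

On the master host the argument would be the file named in the output, `/home/gpadmin/gpAdminLogs/gpinitsystem_20220828.log`.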

Starting and Stopping

Restart

gpstop -r

Apply pg_hba.conf changes

gpstop -u

Stop

gpstop -M fast

Start

gpstart -a

Recovery

ssh gpadmin@smdw

cat > ${MASTER_DATA_DIRECTORY}/recovery.conf << EOF

standby_mode = 'on'

primary_conninfo = 'user=gpadmin host=mdw.sky.local port=5432 sslmode=prefer sslcompression=1 krbsrvname=postgres application_name=gp_walreceiver'

EOF

gpactivatestandby

Reboot

for i in {6..1} ; do ssh 192.168.181.23${i} "reboot" ; done

Shutdown

for i in {6..1} ; do ssh 192.168.181.23${i} "shutdown -h now" ; done
